Dense Network Pruning using Neural Collapse under Imbalanced Dataset

Overview

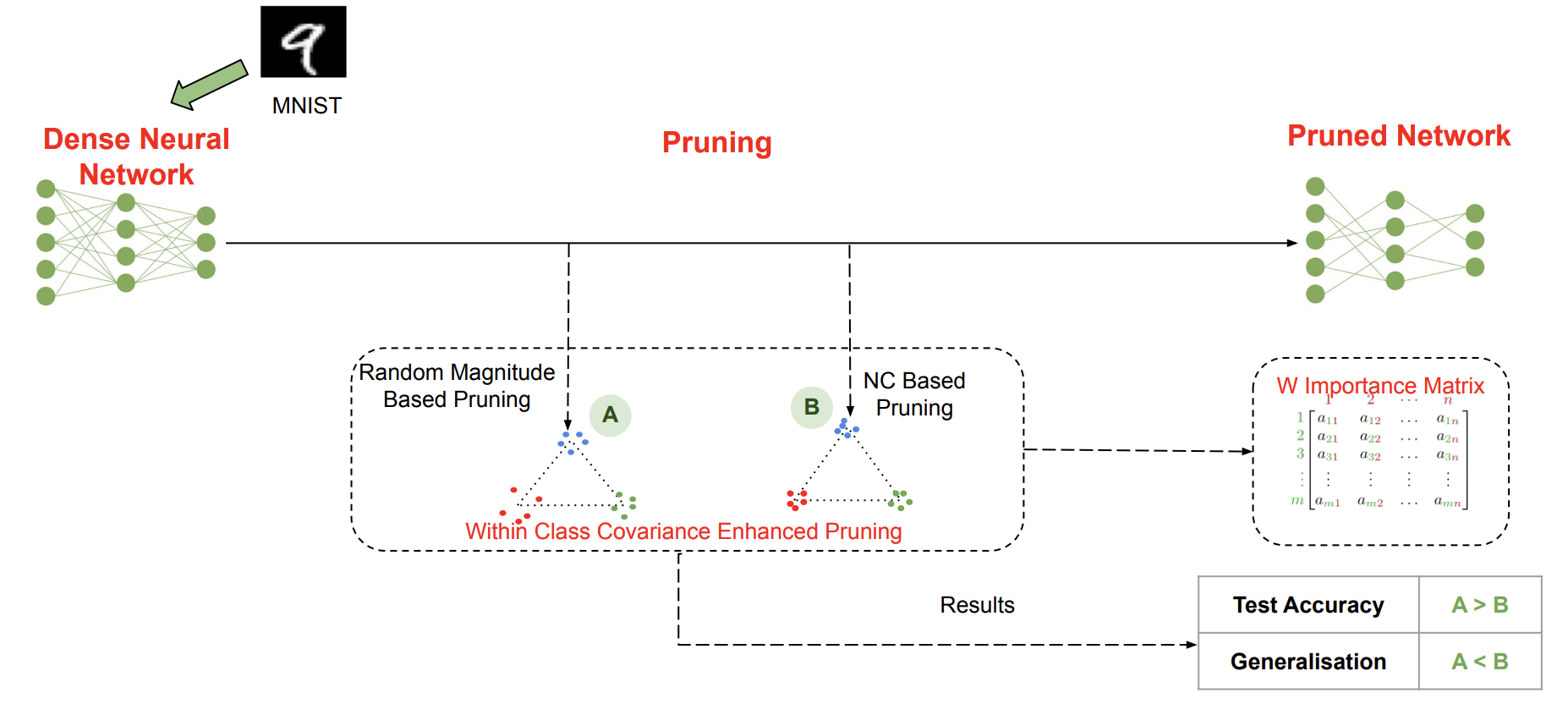

This project implements a novel pruning technique, inspired by Neural Collapse (NC) geometry, that aims to make pruned neural networks more robust, particularly on imbalanced datasets. The method uses the within-class scatter matrix to maintain class separability during pruning so that minority-class features are retained. This addresses the bias introduced by traditional pruning methods, which tend to favor majority classes when the data is imbalanced.
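For readers unfamiliar with the quantity, the within-class scatter matrix is commonly defined as the average outer product of each feature vector's deviation from its own class mean. The sketch below computes it with plain Python lists to stay dependency-free. The function name `within_class_scatter` and the normalization by the total sample count are assumptions made for illustration; this is not the project's implementation, and it does not show how the matrix is turned into a pruning score.

```python
from collections import defaultdict


def within_class_scatter(features, labels):
    """Return the d x d within-class scatter matrix as a list of lists.

    features: list of equal-length feature vectors (lists of floats).
    labels:   list of class labels, one per feature vector.
    Hypothetical helper for illustration; normalizes by the sample count.
    """
    if not features or len(features) != len(labels):
        raise ValueError("features and labels must be non-empty and aligned")

    dim = len(features[0])

    # Group feature vectors by class label.
    groups = defaultdict(list)
    for vector, label in zip(features, labels):
        groups[label].append(vector)

    scatter = [[0.0] * dim for _ in range(dim)]
    for rows in groups.values():
        # Per-class mean, computed column by column.
        mean = [sum(column) / len(rows) for column in zip(*rows)]
        for vector in rows:
            diff = [v - m for v, m in zip(vector, mean)]
            # Accumulate the outer product diff * diff^T.
            for i in range(dim):
                for j in range(dim):
                    scatter[i][j] += diff[i] * diff[j]

    total = len(features)
    return [[value / total for value in row] for row in scatter]


# Toy example: two classes, two samples each.
toy_features = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
toy_labels = [0, 0, 1, 1]
toy_scatter = within_class_scatter(toy_features, toy_labels)
```

A small trace of this matrix means features cluster tightly around their class means, which is the collapse behavior that Neural Collapse describes.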

Key Features

- Neural Collapse-Inspired Pruning: A pruning technique that uses the within-class scatter matrix to preserve class separability in the pruned network.

- Bias Mitigation: Reduces bias towards majority classes in imbalanced datasets by maintaining minority class features during pruning.

- Robustness Testing: Measures perturbation sensitivity on noisy data to assess the robustness of the pruned model.
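One possible way to measure perturbation sensitivity is sketched below: add Gaussian noise to each input and average the Euclidean change in the model's output. This definition, the function name `perturbation_sensitivity`, and the parameters `sigma`, `trials`, and `seed` are all assumptions for illustration; the project may define and compute the metric differently.

```python
import random


def perturbation_sensitivity(model, inputs, sigma=0.1, trials=10, seed=0):
    """Average L2 change in model output under Gaussian input noise.

    model:  callable mapping a list of floats to a list of floats.
    inputs: list of input vectors (lists of floats).
    Hypothetical metric for illustration, not the project's definition.
    """
    rng = random.Random(seed)  # fixed seed keeps the measurement repeatable
    total_change = 0.0
    count = 0
    for x in inputs:
        clean_output = model(x)
        for _ in range(trials):
            # Perturb every input coordinate with zero-mean Gaussian noise.
            noisy_x = [value + rng.gauss(0.0, sigma) for value in x]
            noisy_output = model(noisy_x)
            squared = sum(
                (a - b) ** 2 for a, b in zip(noisy_output, clean_output)
            )
            total_change += squared ** 0.5
            count += 1
    return total_change / count


# Toy models standing in for a dense and a pruned network.
def identity_model(x):
    return list(x)


def doubled_model(x):
    return [2.0 * value for value in x]


sample_inputs = [[0.5, -1.0], [2.0, 0.25]]
identity_score = perturbation_sensitivity(identity_model, sample_inputs)
doubled_score = perturbation_sensitivity(doubled_model, sample_inputs)
```

Under this definition a lower score means the output moves less when the input is perturbed, so scores for a dense model and its pruned counterpart can be compared directly when the same seed is used.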

Research Questions

- Can Neural Collapse-inspired pruning improve the performance of pruned networks under imbalanced datasets?

- Does this pruning method make the pruned model more robust to noisy inputs?

Contributions

- Analysis of pruned neural network performance under various pruning techniques.

- Introduction of a pruning algorithm based on feature space geometry inspired by Neural Collapse.

- Evaluation of robustness and generalization in pruned networks.

Proposed Solution